Get ready for an uncomfortable board meeting. As companies race to implement AI initiatives, a stark reality is emerging: your department’s content infrastructure could be the hidden liability that derails it all.

According to RAND, by some estimates more than 80% of AI projects fail, often because of poorly organized internal data and content. It’s not just about selecting the right technology. The success of your company’s multimillion-dollar AI initiatives hinges on the quality and structure of your content.

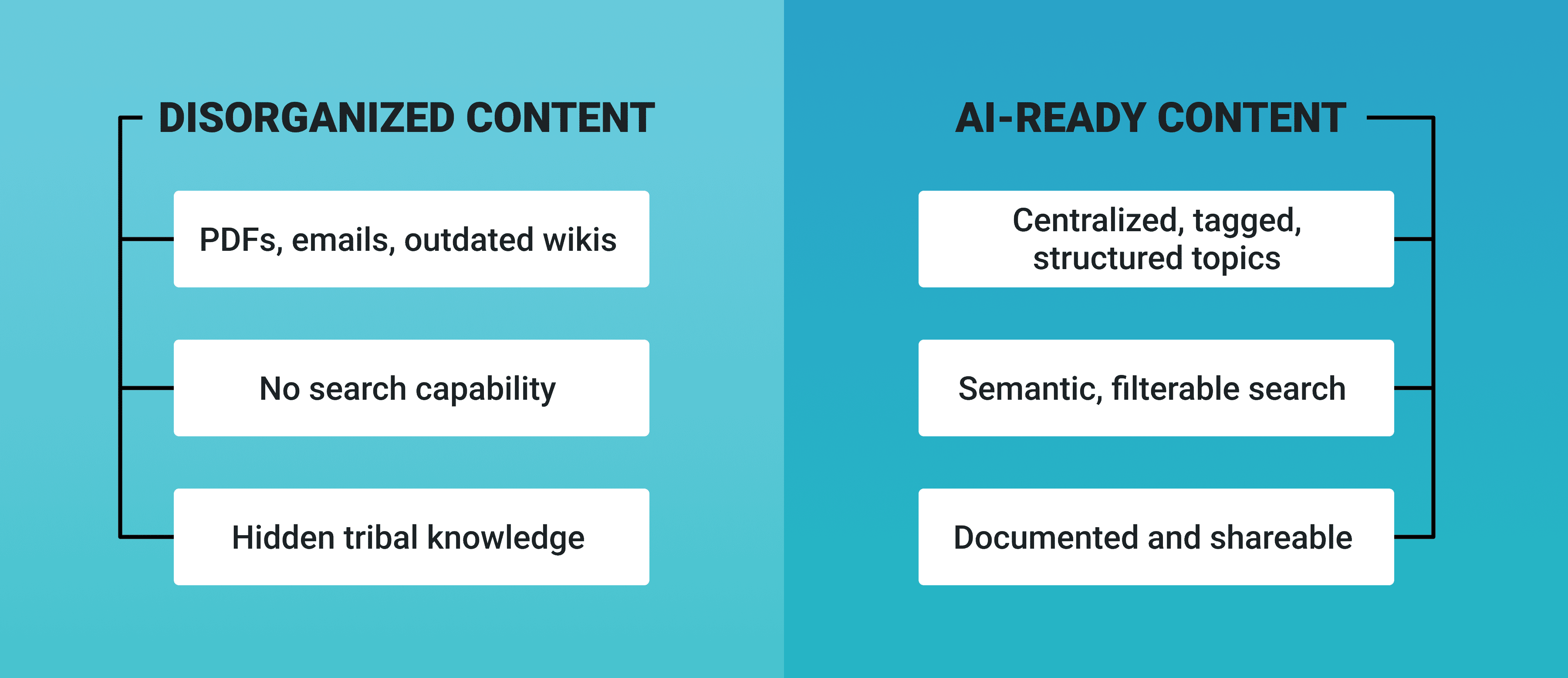

AI implementation follows the principle of garbage in, garbage out (GIGO). If the content and data your department contributes lack structure or proper tagging, AI models and algorithms can’t learn from them effectively and will return subpar output.

What's Keeping Directors Awake at Night?

With 79% of executives expecting generative AI to transform their organizations within three years (Deloitte, 2024), the pressure is mounting. Leaders across industries are losing sleep over five critical threats to AI success:

- Content scattered across departmental silos makes AI training impossible

- Inconsistent documentation standards render valuable knowledge unusable

- Outdated information contaminates training data

- Critical content buried in formats AI systems can’t process

- No clear protocols for managing confidential content

Sound familiar? If your stomach just tightened, you’re not alone.

The Content Challenge: A Widening Gap

Even companies succeeding with AI, those reporting profit gains of 11% or more (McKinsey & Company, 2024), cite content readiness as their biggest hurdle. Poorly organized internal content remains one of the top reasons AI projects stall or fail.

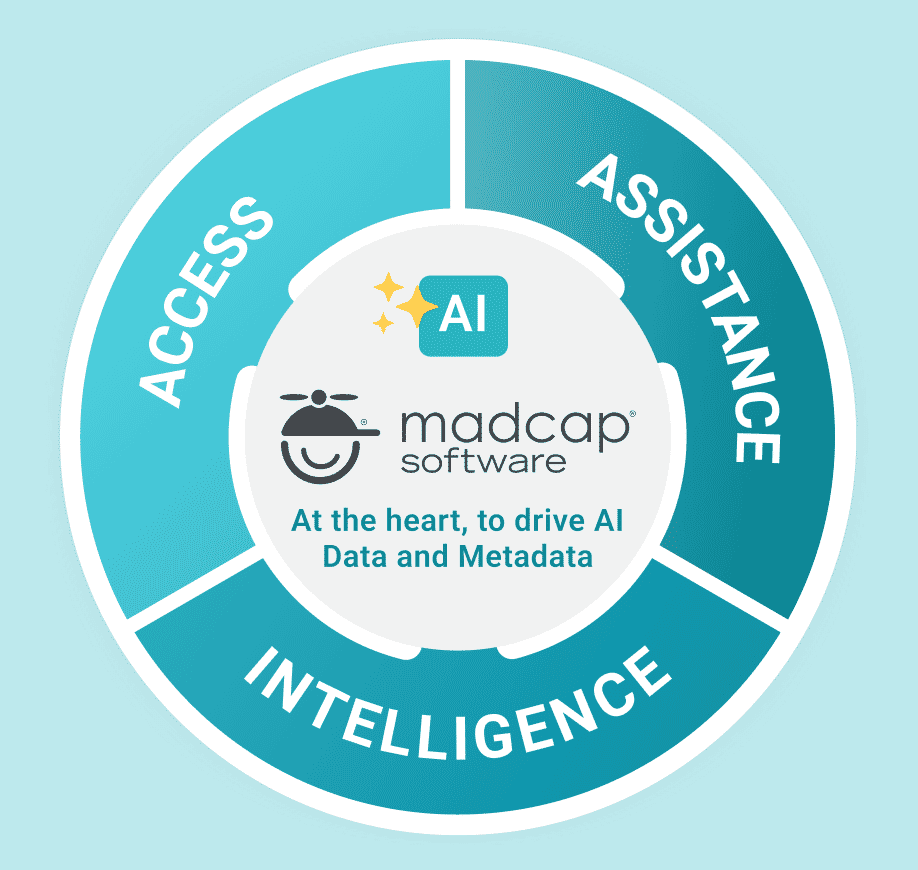

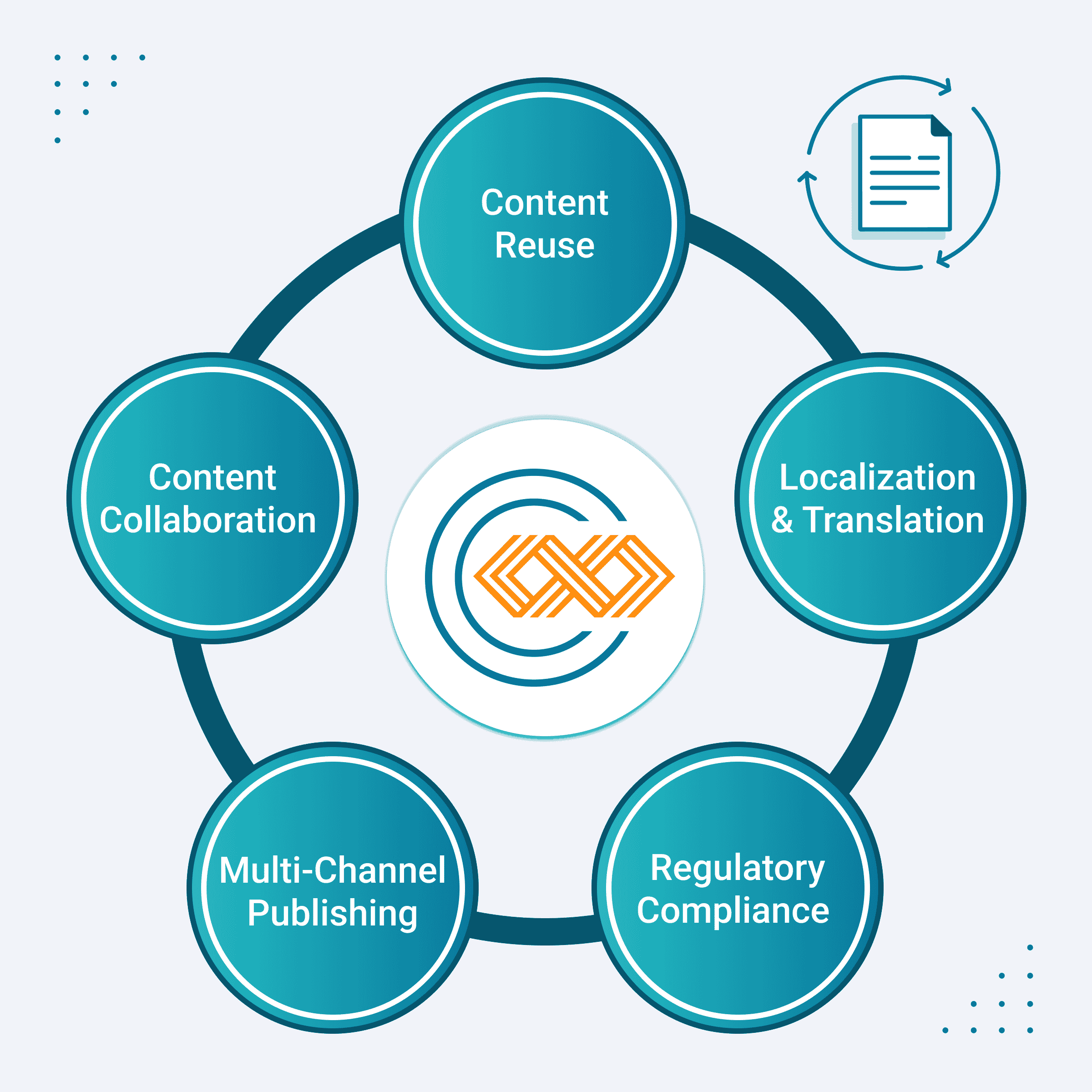

The gap between AI leaders and followers is widening. Organizations with AI-ready content, data, and systems show immediate value through efficiency, and they gain a long-term competitive advantage from a content infrastructure built for innovation. To get there, many companies are investing in content delivery and analytics platforms that centralize, tag, and structure their knowledge assets, making them accessible and AI-ready.

According to Gartner, companies with robust content infrastructure report 22.6% higher productivity and 15.2% lower operational costs. Each successful AI model deployment widens the gap, leaving behind organizations that still rely on disjointed content systems.

AI’s power lies in its ability to generate insights at scale, but it’s also prone to “hallucinations”: fabricated, biased, or incorrect output. Many of these failures trace back to the GIGO principle. When AI consumes poorly labeled content, inconsistent documentation, or outdated data, it doesn’t just make neutral mistakes; it fabricates plausible-sounding but false results that can mislead teams and decision-makers.

Many organizations treat hallucinations as technical model problems. In reality, they are often content problems. A lack of structure, metadata, and human-validated labeling at the source lets flawed inputs slip into training data, which leads to unreliable outputs.

To reduce hallucination risks, teams must:

- Implement consistent content standards across all departments.

- Use human review in the labeling process to catch anomalies early.

- Tag and structure all input data for context-aware interpretation.

- Regularly audit model results for bias, misinformation, and gaps.

Leveraging technical writing software can streamline documentation, standardize labeling, and ensure your content is structured in ways that support reliable AI output. Remember: the algorithm is only as reliable as the data it consumes. The better your internal content process, the less your organization will suffer from embarrassing or damaging hallucinated output.

Career Consequences

Your team’s ability to support AI isn’t just a tech issue; it’s a leadership issue. When AI initiatives stall, departments with inadequate content management face scrutiny. Teams waste time cleaning data instead of training models. Projects are delayed or even canceled when unusable content is discovered too late, undermining results and performance. Each failure raises uncomfortable questions about departmental leadership.

How to Prevent a Disastrous Outcome

Forward-thinking directors are already focusing on three strategic areas to prepare their teams:

- Infrastructure: Implementing unified content platforms and enforcing clear management protocols.

- Governance: Establishing quality standards and controls for sensitive content and data.

- Training: Developing programs to promote consistent, structured content creation across teams.

Cloud-based workflow management platforms are increasingly used to unify processes, enforce protocols, and break down documentation silos across departments.

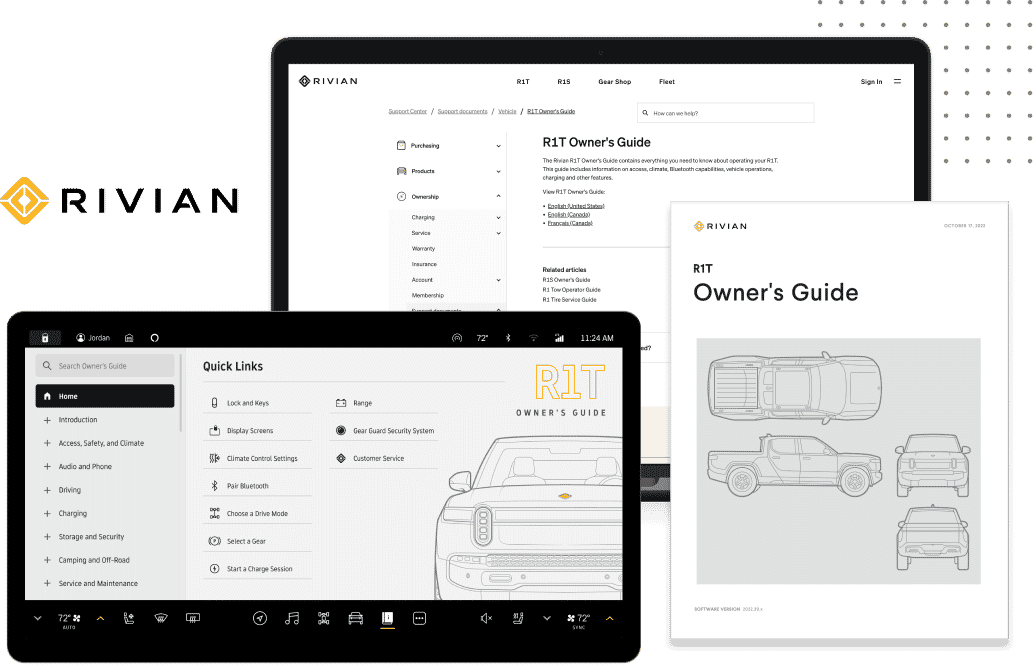

It may feel overwhelming, but solutions exist. MadCap Software partners with organizations serious about getting their content AI-ready. Our approach goes beyond software to deliver a methodical path to transformation.

Build an AI Audit Framework to Lead with Confidence

Without visibility into how content flows into your AI systems, you can’t lead responsibly—or fix what’s broken. That’s where an AI audit framework becomes essential. Unlike traditional IT audits, this process focuses on the content-to-algorithm pipeline: how information is sourced, structured, labeled, and applied to train or inform AI models.

A robust audit framework helps detect:

- Biased or low-quality input data

- Broken labeling processes or inconsistent metadata

- Risks of regulatory non-compliance due to poor data lineage

- Flawed logic in model results or decision outputs

Deploying advanced content authoring software empowers teams to consistently tag, structure, and update content, ensuring your AI audit process starts with high-quality inputs.

Start with a simple but repeatable process:

- Content Mapping – Identify what data your models use, where it lives, and how it’s labeled.

- Validation Checkpoints – Introduce regular reviews of model output against known truth sources.

- Bias Detection – Look for patterns in errors or inconsistencies, especially in sensitive categories that affect people, such as demographic attributes.

- Documentation Trail – Ensure every step in the data-to-decision process is tracked and accessible.
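For teams that want to see what the Content Mapping and Documentation Trail steps might look like in practice, here is a minimal sketch, assuming each content item is described by a simple inventory record. Everything in it is hypothetical and for illustration only: the `ContentItem` record, the `audit` function, the required tag names, and the 365-day review window are assumptions, not part of any MadCap product. The sketch flags items with missing metadata, outdated items, and confidential items that should stay out of training data, and returns each finding as a readable line for the audit trail.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date, timedelta

# Hypothetical metadata fields every content item is expected to carry.
REQUIRED_TAGS = {"owner", "product", "audience"}
# Assumed review window; adjust to your own governance policy.
MAX_AGE = timedelta(days=365)


@dataclass
class ContentItem:
    item_id: str
    source: str  # where the content lives (Content Mapping)
    tags: dict[str, str] = field(default_factory=dict)
    last_reviewed: date | None = None
    confidential: bool = False


def audit(items: list[ContentItem], today: date) -> list[str]:
    """Return one finding per problem, forming a simple documentation trail."""
    findings = []
    for item in items:
        # Missing metadata makes content hard for AI systems to interpret.
        missing = sorted(REQUIRED_TAGS - set(item.tags))
        if missing:
            findings.append(f"{item.item_id}: missing tags {', '.join(missing)}")
        # Unreviewed or outdated content risks contaminating training data.
        if item.last_reviewed is None:
            findings.append(f"{item.item_id}: never reviewed")
        elif today - item.last_reviewed > MAX_AGE:
            findings.append(f"{item.item_id}: stale since {item.last_reviewed.isoformat()}")
        # Confidential content is flagged rather than silently included.
        if item.confidential:
            findings.append(f"{item.item_id}: confidential, exclude from training data")
    return findings
```

Returning plain-text findings keeps the trail easy for non-technical reviewers to read; a real audit would likely store each finding with a timestamp and reviewer so every step stays traceable.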

This isn’t just risk management; it’s a strategic advantage. By operationalizing content transparency and AI accountability, you protect your company, your reputation, and your bottom line.

From Crisis to Control

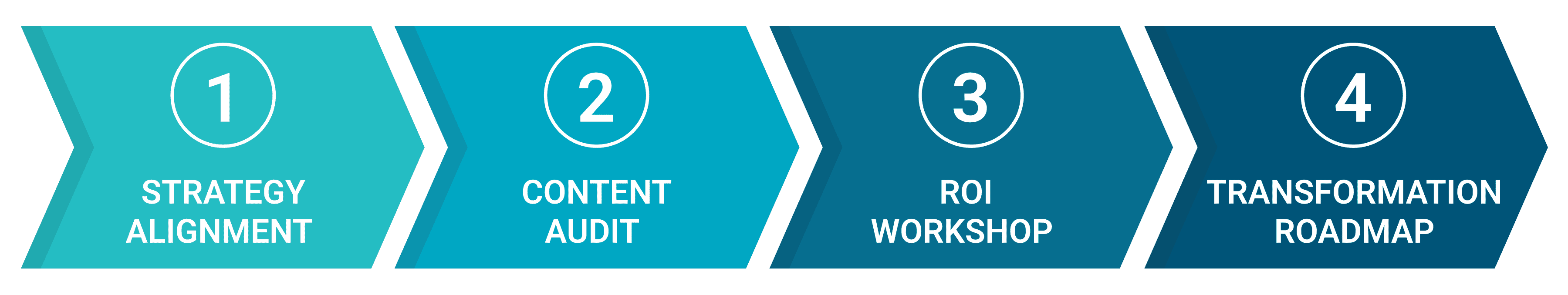

Here’s what the process looks like:

- Executive Strategy Alignment: Start with an executive session where your leadership team and MadCap Software experts align on your vision and priorities.

- Content Ecosystem Assessment: We conduct a deep audit of your content systems to uncover inefficiencies and surface opportunities. This helps build a compelling business case that speaks directly to your organization’s bottom line.

- ROI-Focused Business Case: We translate technical findings into financial impact during an ROI workshop with your stakeholders. With a data-backed blueprint in hand, you’ll be able to move forward with confidence in implementing a unified content solution across your organization.

Content infrastructure has evolved from an IT concern into a board-level strategic asset. Soon, content readiness will dominate executive agendas. Will you be the leader who foresaw the challenge—or the one explaining why you didn’t act?

The tools and expertise to solve this challenge exist today. Take the first step. Contact MadCap Software’s enterprise team to begin your content transformation journey.